Algorithms, Machine Learning, Artificial Intelligence and us Designers

A transcript of the Interaction Design London meetup

Yesterday I attended the Interaction Design London meetup on “Algorithms, Machine Learning, AI and us designers”.

Below are some notes I took during the talks, along with some contextual links.

The slides will probably not be published, but there is some live coverage of the event on Twitter.

“Interaction Designers vs The Machine, or how I stopped worrying and learned to love algorithms”

Giles Colborne (Managing Director at CX Partners – @gilescolborne)

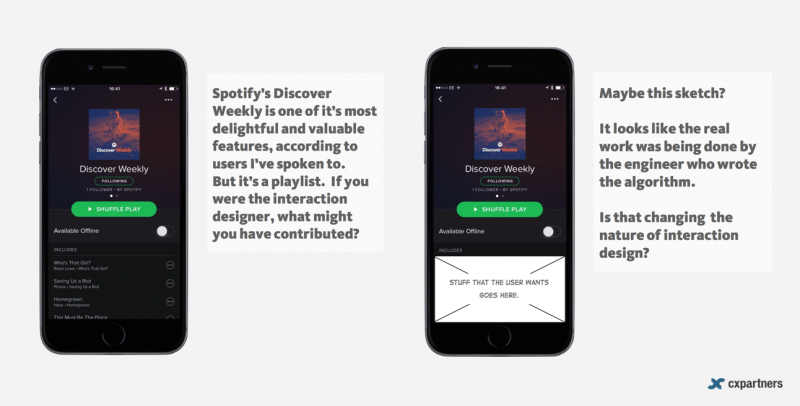

Spotify sends users a “Discover Weekly” playlist. People love it, and some say it is Spotify’s most valuable feature. But what about the design? It’s just a playlist like any other. So where is the value? In the algorithm that generates “the things users want”.

An excerpt from a similar presentation by the same author.

Let’s talk about train booking apps. Whatever you do, it is impossible to satisfy every user need in a single interface. The solution? A chatbot: a machine that uses natural language to understand what each user wants and needs, and to satisfy his or her specific needs.
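To make the chatbot idea a little more concrete, here is a minimal sketch of “slot filling” for a train-booking request. It assumes a simple regular-expression parser, far cruder than the trained language models real bots use; the patterns, the `parse_request` function and the slot names are all hypothetical. The bot extracts what it can and reports which slots are still missing, so it can ask the user a follow-up question instead of showing one form for every possible need.

```python
import re

# Hypothetical patterns: a real bot would use a trained natural-language model.
DESTINATION = re.compile(r"\bto ([A-Z][a-z]+(?: [A-Z][a-z]+)*)")
TIME = re.compile(r"\bat (\d{1,2})(?::(\d{2}))?\s*(am|pm)?", re.IGNORECASE)

def parse_request(text):
    """Extract booking slots from free text and list the ones still missing."""
    slots = {}
    if m := DESTINATION.search(text):
        slots["destination"] = m.group(1)
    if m := TIME.search(text):
        hour = int(m.group(1))
        minute = int(m.group(2) or 0)
        suffix = (m.group(3) or "").lower()
        # Convert a 12-hour time to 24-hour format.
        if suffix == "pm" and hour < 12:
            hour += 12
        elif suffix == "am" and hour == 12:
            hour = 0
        slots["time"] = f"{hour:02d}:{minute:02d}"
    missing = [name for name in ("destination", "time") if name not in slots]
    return slots, missing

print(parse_request("I need a train to Leeds at 9am"))
print(parse_request("Book me a ticket to London Kings Cross"))
```

In the second call the time slot comes back missing, which is the cue for the bot to ask “What time would you like to travel?” rather than fail.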

Taxi drivers will be replaced by algorithms. Trading and fund-management bots outperform human traders.

The Next Rembrandt is a project about a “new” Rembrandt, painted by an artificial intelligence algorithm – https://www.nextrembrandt.

Google DeepMind has developed an app that, when you take a photo of food, can estimate the calories in that meal.

As designers, these are the solutions we should aim at:

Shortening tasks – Wells Fargo has developed a simple algorithm that pre-fills the amount you usually withdraw at a cash machine, so users don’t need to enter it. On average it saves 5 minutes a year. Not much, right? But this delightful piece of interaction design makes people recommend the bank to others.

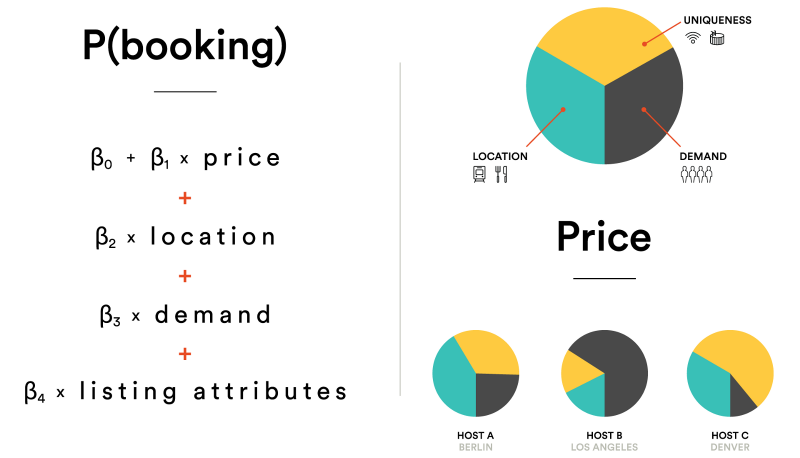

Pattern finding – Airbnb’s price suggestions for hosts renting out their homes

Anticipating users’ needs – Google Now

Coordinating complex environments – Amazon Echo
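The Wells Fargo example of shortening a task can be sketched as follows, assuming the “usual amount” is simply the amount a customer has withdrawn most often recently. The bank’s actual rule was not described in the talk; `suggest_withdrawal` and its `recent` parameter are hypothetical.

```python
from collections import Counter

def suggest_withdrawal(history, recent=20, default=None):
    """Pre-fill the cash-machine amount with the customer's most frequent recent withdrawal.

    Hypothetical rule: look only at the last `recent` withdrawals and pick the most common one.
    """
    window = history[-recent:]
    if not window:
        return default  # no history yet: fall back to asking the user
    # most_common(1) returns [(amount, count)]; ties go to the amount seen first
    return Counter(window).most_common(1)[0][0]

history = [60, 100, 60, 40, 60, 100]
print(suggest_withdrawal(history))  # 60
print(suggest_withdrawal([]))       # None
```

Limiting the look-back window lets the suggestion follow a change in habits instead of being anchored to years-old withdrawals.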

How many IX designers do I need to design a conversational UI like Siri, or Echo, or Facebook’s bots?

How do you design services based on algorithms?

Algorithms need a LOT of data. Unique data. Think of Spotify: they are the only ones who have that kind of information about users’ musical tastes and preferences, and that is where the intrinsic value lies.

The more layers of data you have (e.g. traffic, weather forecasts, bus timetables), the more you can correlate the data and find patterns. Data series and data sets sometimes need to be simplified if there is too much noise or error, and sometimes this leads to better algorithms.
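A small illustration of correlating two layers of data and simplifying a noisy series. It assumes invented daily rainfall and bus-delay figures, uses Pearson correlation to measure how strongly the layers move together, and uses a moving average as one simple way of reducing noise; both techniques are common choices picked for this sketch, not methods named in the talk.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equally long series (-1 to 1)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def moving_average(values, window=3):
    """Simplify a noisy series by averaging each run of `window` consecutive points."""
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]

# Hypothetical data: two "layers" recorded over the same ten days.
rain_mm = [0, 2, 8, 1, 0, 12, 5, 0, 3, 9]
bus_delay_min = [2, 4, 11, 3, 1, 15, 7, 2, 5, 12]

print(round(pearson(rain_mm, bus_delay_min), 2))
print(moving_average(bus_delay_min))
```

A high correlation here only says the two layers move together in this sample; it is the starting point for finding a pattern, not proof that rain causes the delays.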

Machine learning is not magic, it’s engineering.

At Airbnb they created mixed, cross-functional teams (data analysts, interaction designers, programmers) that sketched interfaces and produced data visualisations, which were then used to work out the next steps and what other data they needed.

How the algorithm behind Airbnb’s Smart Pricing calculator was developed.

We make predictions, and they are not ‘the’ reality. But when designing your algorithms you can choose how to be “wrong”: high bias (individual predictions vary little, but chances are your average is wrong) or high variance (on average you are right, but any single prediction can have a larger error).
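To make the trade-off concrete, here is a small simulation, assuming a made-up quantity with a true value of 10 that can only be observed through small, noisy samples. The “high-bias” estimator shrinks the sample mean towards zero (steadier, but systematically off); the “high-variance” one is the plain sample mean (right on average, but individual estimates scatter). Shrinkage is a swapped-in illustration, not a technique mentioned in the talk, and every parameter is hypothetical.

```python
import random
import statistics

def simulate(true_value=10.0, noise=5.0, sample_size=5, trials=2000, shrink=0.5, seed=42):
    """Estimate `true_value` many times with two estimators and report their bias and variance."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    high_bias, high_variance = [], []
    for _ in range(trials):
        # Each trial sees only a small, noisy sample of the quantity.
        sample = [rng.gauss(true_value, noise) for _ in range(sample_size)]
        mean = statistics.fmean(sample)
        high_variance.append(mean)       # unbiased, but scatters from trial to trial
        high_bias.append(shrink * mean)  # pulled towards 0: steadier, but off on average

    def summarize(estimates):
        bias = statistics.fmean(estimates) - true_value
        return {"bias": bias, "variance": statistics.pvariance(estimates)}

    return {"high_bias": summarize(high_bias), "high_variance": summarize(high_variance)}

for name, result in simulate().items():
    print(f"{name}: bias={result['bias']:.2f}, variance={result['variance']:.2f}")
```

With these settings the shrunk estimator should land roughly 5 below the true value with a variance near 1.25, while the plain mean should be close to unbiased with a variance near 5 (noise² divided by the sample size).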

The next generation of IX designers will have to consider the etiquette and ethics of suggestions. Correlations and data alone are not enough: you need human intervention to interpret the data and to bring the emotional, human factor into the service (think of Eric Meyer and the Facebook “Year in Review” that greeted him with a photo of his daughter, who had died that year). The solution is to bring human rules (the ones that apply when one human being interacts with another) into this kind of interaction.

Natural language interfaces need to be designed, and that requires a lot of knowledge about the psychology of conversation. For example, a bank made its “bot” use “we” instead of “I” when referring to itself, to stress to users that it was not a human agent. This changed the way people interacted with the bot.

Note: anthropomorphisation raises users’ expectations. They forget that they are interacting with a machine.

How did Spotify design their “Discover Weekly”? It took a huge amount of user observation and research.

Our core skills as designers are still valid, but we have to update our skills, knowledge, tools and practices.

Me: the value of a company has moved from data (Google scraping the web), to information (Google’s ranking algorithms), to knowledge (Google’s self-driving cars). Jason Mesut: I would add know-how: take away the data and you have algorithms that can work on any kind of data source?

IBM Watson

Ed Moffat (UK Design Manager, IBM Watson – @EPJMoffat)

Jeff Morgan (Design Lead, IBM Watson – @UX_John)

Is 92% accuracy good? It depends on what you measure it against.
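A worked example of why “92%” alone says little, assuming a hypothetical set of 100 conversations of which only 5 contain a real problem. A model that is 92% accurate looks good until you compare it with a trivial baseline that always answers “ok”, which scores 95% while catching none of the problems. Recall on the problem cases is one alternative yardstick; the data and labels are invented for illustration.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def recall(predictions, labels, positive="problem"):
    """Share of true `positive` cases that the predictions actually caught."""
    caught = sum(p == positive and y == positive for p, y in zip(predictions, labels))
    return caught / labels.count(positive)

# Hypothetical data: 95 fine conversations, 5 with a real problem.
labels = ["ok"] * 95 + ["problem"] * 5
# Hypothetical model: 6 false alarms on the fine ones, catches 3 of the 5 problems.
model = ["ok"] * 89 + ["problem"] * 6 + ["problem"] * 3 + ["ok"] * 2
# Trivial baseline: always predict the majority class.
baseline = ["ok"] * 100

for name, preds in [("model", model), ("baseline", baseline)]:
    print(f"{name}: accuracy={accuracy(preds, labels):.2f}, recall={recall(preds, labels):.2f}")
```

Measured by accuracy the baseline wins (0.95 vs 0.92); measured by how many real problems are caught, the model wins (0.60 vs 0.00).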

How do you know whether a robot doing a human’s job is doing well? Ask the user for feedback (generally at the end of the task). But be careful: the results are often biased, because on average only 5% of users give feedback. And thumbs up/down is a very poor way to collect feedback (you get the verdict, but not the reason). It is better to use the conversation itself as a way to connect with the user in a “human” way. Another option is to use sentiment analysis, or to track repetitions of the default dialog.
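The last two signals can be sketched from conversation logs. This assumes a log of `(speaker, text)` pairs, a single hypothetical default reply that the bot falls back to when it does not understand, and a tiny hand-made list of negative words standing in for a real sentiment-analysis model. Repeated fallbacks in one conversation hint that the bot is failing, even when the user never leaves explicit feedback.

```python
# Hypothetical default reply the bot uses when it cannot match an intent.
DEFAULT_REPLY = "Sorry, I didn't understand that."
# Hypothetical word list: a crude stand-in for a proper sentiment-analysis model.
NEGATIVE_WORDS = {"useless", "wrong", "annoying", "frustrating"}

def fallback_stats(conversation, threshold=2):
    """Count how often the bot fell back to its default reply, and flag repeat offenders."""
    bot_turns = [text for speaker, text in conversation if speaker == "bot"]
    fallbacks = sum(text == DEFAULT_REPLY for text in bot_turns)
    rate = fallbacks / len(bot_turns) if bot_turns else 0.0
    return {"fallbacks": fallbacks, "rate": rate, "flagged": fallbacks >= threshold}

def negative_user_turns(conversation):
    """Count user turns containing at least one word from NEGATIVE_WORDS."""
    count = 0
    for speaker, text in conversation:
        words = {w.strip(".,!?").lower() for w in text.split()}
        if speaker == "user" and words & NEGATIVE_WORDS:
            count += 1
    return count

conversation = [
    ("user", "I want to change my booking"),
    ("bot", "Which booking would you like to change?"),
    ("user", "the one on Friday"),
    ("bot", DEFAULT_REPLY),
    ("user", "Friday, 3pm train"),
    ("bot", DEFAULT_REPLY),
    ("user", "This is useless!"),
]
print(fallback_stats(conversation))
print(negative_user_turns(conversation))
```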

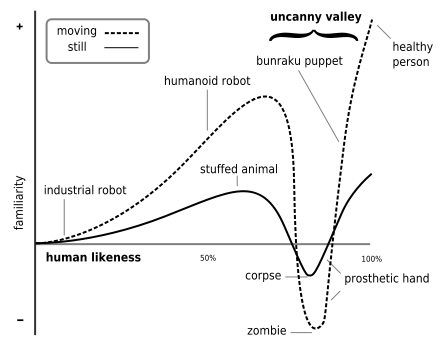

Once accuracy is very high, let’s say 100%, how do you make the system feel ‘cognitive’ in a good way (like Wall-E) rather than like a scary, creepy machine (like the Terminator)?

Familiarity vs human likeness: the “uncanny valley” chart, described by Professor Masahiro Mori as Bukimi no Tani Gensho in 1970, has now been revised to include interaction, not only movement. The curve differs depending on whether the bot is still or in motion, and differs even more if it is interactive.

How do you train an AI like Watson in the real world (not as in the movies), so that it comes across as cognitive rather than creepy? The idea is that “Watson Knows Best”: Watson does most of the job and relies on humans only to cover the areas of knowledge it cannot cover itself.
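The division of labour described here can be sketched as confidence-based routing, assuming the system reports a confidence score with each answer: it handles the questions it is confident about and hands the rest to a human expert, whose answers can later become new training examples. The `Answer` type, the `handle` function and the 0.8 threshold are hypothetical and not part of any Watson API.

```python
from dataclasses import dataclass

@dataclass
class Answer:
    text: str
    confidence: float  # assumed to be in [0, 1]

def handle(question, answer, review_queue, threshold=0.8):
    """Answer automatically when confident; otherwise queue the question for a human."""
    if answer.confidence >= threshold:
        return answer.text
    # A human covers the gap; the question and their answer can later be used for training.
    review_queue.append(question)
    return None

queue = []
print(handle("When does my policy renew?", Answer("On 1 March.", 0.93), queue))
print(handle("Does my policy cover flood damage abroad?", Answer("Possibly.", 0.41), queue))
print(queue)
```

Raising the threshold sends more questions to people and fewer wrong answers to users; lowering it does the opposite, so the value is a design decision as much as a technical one.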